Sage XRT Advanced

Introduction

The Templates installation provides you with a ready-to-use set of Views, Dashboards and Reports.

Assuming the Central Point is freshly installed and empty, the installation consists of two steps:

- Configuring the Data Sources for the ERP and Data Warehouse (optional) databases.

- Importing the Templates into the Central Point.

Additional steps, such as building and loading the OLAP Cubes, may also be required.

Nectari DataSync

Sage XRT Advanced's data is only accessible from a Cloud platform, so it must be used in conjunction with DataSync.

Prerequisites

- A Sage XRT Advanced production environment

- A destination environment (Cloud or On Premise)

- A valid version of DataSync (refer to Installing DataSync for more information)

Set the Connections

You will need three Source Connections:

- One with the Tracking Type set to Date

- One with the Tracking Type set to None

- One that is identical to the Destination Connection (Data Warehouse) to perform Transformations

Settings in DataSync

- In DataSync, create a new Source Connection.

- In the list, select Custom API.

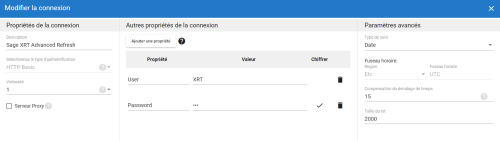

- In the Connection Properties window:

- In the Description field, enter a name for this connection.

- For Select Authentication Type, choose HTTP (Basic).

- Upload either CONN_2021R1.X.XXX_SXA Refresh.apip or CONN_2021R1.X.XXX_SXA NoRefresh.apip.

- You need one Source Connection for tables that can be loaded incrementally (i.e., Refresh) and another for tables that cannot (i.e., NoRefresh).

- In Additional Connection Properties:

- Add a Visible Property, name it User and assign it your Sage XRT Advanced user name.

- Then, add an Encrypted Property, name it Password and assign it the value of your Sage XRT Advanced password.

- Click Save when finished.

- Create two Destination Connections: one for the Sage XRT Advanced Data Warehouse and one for the Nectari Central Point.

- Create another Source Connection that points to the Sage XRT Advanced Data Warehouse created as a Destination Connection. This Source Connection is used to transform currency rates.

Importing the Extractions

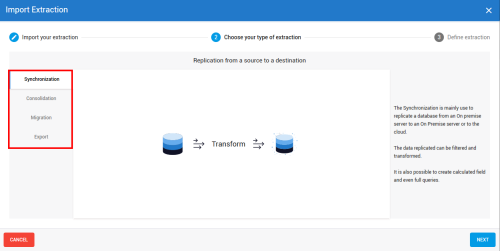

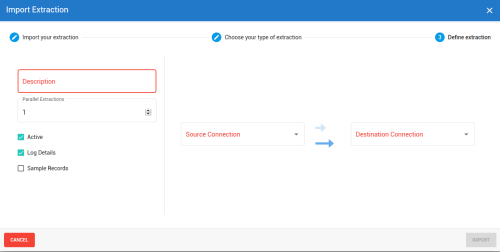

This feature allows you to import a pre-defined template or to restore a backup that you created yourself with the Export feature (refer to Export an Extraction for more details).

Some pre-defined templates are already available; if you don't have access to them, please contact your partner. For example, one pre-defined template defines the list of tables and fields to synchronize in order to send Sage 300, X3, Acumatica or Salesforce data to the Cloud.

- Click one of the extractions in the list, then click the Import icon in the upper right-hand corner.

- In the Import Extraction window, click the Choose a zip file hyperlink and browse to the location where you saved the exported .zip file (or drag the file directly into the window), then click Next.

For Sage XRT Advanced, you must import four zip files:

- DS_2021R1.X.XXX_SXA SYNC Refresh.zip – For tables that have a valid Last Modified Date

- DS_2021R1.X.XXX_SXA SYNC NoRefresh.zip – For tables that do not have a valid Last Modified Date

- DS_2021R1.X.XXX_SXA SYNC Username Import.zip – To import the Users created in Sage XRT Advanced into the Nectari Central Point database

- DS_2021R1.X.XXX_SXA SYNC Rate Master.zip – To create missing Currency Rates (e.g., reversing the rate or creating 1-to-1 rates when the same currency is used on both sides)

  - This zip file must be imported after the NoRefresh Extraction has been built, since it uses the CurrencyRate table from that Extraction.

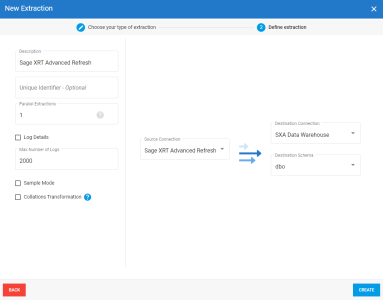

- Refer to Set Up the Extraction Panel to define the extraction and click on Import to finish the process.

Please be sure to perform the Extractions in the following order:

- Sage SXA Refresh to Sage SXA Data Warehouse

- Sage SXA NoRefresh to Sage SXA Data Warehouse

- Sage SXA NoRefresh to Sage SXA Central Point (for Users)

- Sage SXA Data Warehouse to Sage SXA Data Warehouse (for Rates)

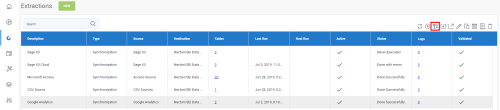

The Extractions window will automatically switch to the Tables window.

Refer to Add an SQL Query to add SQL statements to specific tables, and to Configuring the Field Section to customize fields (add calculations, change destination names, etc.).

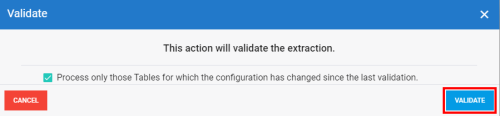

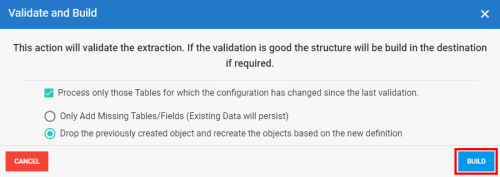
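The purpose of the Rate Master extraction (creating reversed rates and 1-to-1 rates for missing currency pairs) can be illustrated with a short Python sketch. It only models the idea under stated assumptions: rates are held in a hypothetical dict keyed by (from_currency, to_currency), a missing reverse rate is taken as the reciprocal of the known rate, and every currency gets a rate of 1 to itself. The actual extraction works on the CurrencyRate table with its own logic, which this sketch does not reproduce.

```python
from decimal import Decimal

# Hypothetical in-memory model of the CurrencyRate table:
# (from_currency, to_currency) -> rate. This is not the real table layout.
Rates = dict[tuple[str, str], Decimal]


def complete_rates(rates: Rates) -> Rates:
    """Return a copy of `rates` with missing reverse and 1-to-1 rates added.

    Assumptions: a missing reverse rate is the reciprocal of the known rate,
    and each currency that appears gets a rate of 1 to itself.
    """
    completed: Rates = dict(rates)
    currencies = {currency for pair in rates for currency in pair}

    # Reverse each known rate when the opposite direction is missing.
    for (src, dst), rate in rates.items():
        if (dst, src) not in completed and rate != 0:
            completed[(dst, src)] = Decimal(1) / rate

    # Same currency on both sides: add a 1-to-1 rate if none exists.
    for currency in currencies:
        completed.setdefault((currency, currency), Decimal(1))

    return completed
```

For example, starting from a single EUR to USD rate of 1.25, the sketch adds USD to EUR at 0.8 plus EUR to EUR and USD to USD at 1. Existing rates are never overwritten, so a rate maintained in Sage XRT Advanced always takes precedence over a derived one.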

Validating and Building the Extractions

Once your extraction (source and destination connections and their related tables) is set up, you must validate its settings before you can run it.

The feature will:

- Ensure that all the tables/fields exist in the source connections,

- Validate all SQL queries or calculated fields,

- Ensure that the data integrity in the destination connection is not affected (e.g., by a change to the table structure).

To do so:

- Select the extraction you want to validate and build in the list and click on the Validate and Build icon.

- In the new window, choose the action which best fits your needs and click on Build (Validate for Migration and Export extraction types).

The available options depend on the extraction type.

For Synchronization and Consolidation extraction types:

For Migration and Export extraction types:

- Wait for the process to be done.

Once the process is finished, a Validation Report window gives you a quick overview of the results. The results are displayed in the Status column; if there is an error, click the hyperlink in the Error column to open the Log Page for more details.

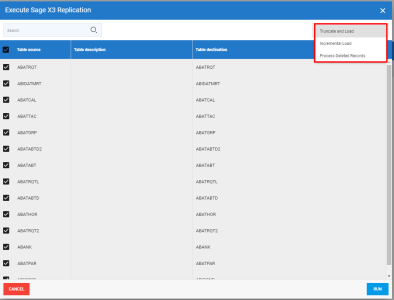
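Two of the checks listed above (tables and fields must exist in the source, and the destination table structure must not change) can be sketched in Python as follows. This is an illustration only, not the DataSync validation code: `TableDef`, `validate_extraction` and the dict-based schema descriptions are hypothetical, and SQL query and calculated-field validation are not modeled.

```python
from dataclasses import dataclass


@dataclass
class TableDef:
    """Hypothetical extraction table definition: name and field -> SQL type."""
    name: str
    fields: dict[str, str]


def validate_extraction(extraction: list[TableDef],
                        source: dict[str, dict[str, str]],
                        destination: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of validation errors (empty when the build can proceed)."""
    errors: list[str] = []
    for table in extraction:
        src_fields = source.get(table.name)
        if src_fields is None:
            errors.append(f"{table.name}: table missing in source")
            continue
        dest_fields = destination.get(table.name, {})
        for name, sql_type in table.fields.items():
            if name not in src_fields:
                errors.append(f"{table.name}.{name}: field missing in source")
            # An existing destination column with a different type would mean
            # changing the destination table structure.
            dest_type = dest_fields.get(name)
            if dest_type is not None and dest_type != sql_type:
                errors.append(
                    f"{table.name}.{name}: destination type {dest_type} "
                    f"would change to {sql_type}")
    return errors
```

Collecting every error instead of stopping at the first one mirrors the idea of a report that lists all problems at once, as the Validation Report does.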

Running the Extractions

Once your extraction has been validated (refer to Validate and Build an Extraction for more details), you can run it manually if you want immediate results instead of scheduling it.

- Select the extraction you want to run in the list and click on the Run Extraction Now icon.

- In the upper-right hand corner, choose the action to execute and the table(s), then click Run.

Load (for the Migration extraction type only): Loads all data in your destination from your source.

Truncate and Load: Replaces all data in your destination with the current data from your source.

Incremental Load: Retrieves only the records that have changed since your last Incremental Load and replaces their corresponding records in your destination with the updated ones.

Process Deleted Records: Sets the maximum number of days, based on the last changed date, that the validation process looks back to check whether records have been deleted. For example, if the value is set to 30 days, the system checks all transactions created or updated in the last 30 days and validates whether they still exist in the source. Those that no longer exist in the source are then deleted from the destination.

- Wait for the process to be done.

When the process is finished, the results are displayed in the Status column. If there is an error, you can view more details on it by clicking on the hyperlink in the Error column, which leads to the Log Page.
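The Truncate and Load, Incremental Load and Process Deleted Records actions described above can be modeled with a small Python sketch. It is a simplified illustration under assumptions, not how DataSync is implemented: tables are hypothetical dicts keyed by primary key, each record carries an assumed `last_modified` value standing in for the last changed date, and "changed since the last load" is taken to mean strictly later than the previous load time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Record:
    key: int
    value: str
    last_modified: datetime  # assumed "last changed date" column


Table = dict[int, Record]  # hypothetical model: primary key -> record


def truncate_and_load(source: Table) -> Table:
    # Replace every destination row with the current source rows.
    return dict(source)


def incremental_load(source: Table, destination: Table,
                     last_load: datetime) -> Table:
    # Upsert only the source records changed since the previous load.
    result = dict(destination)
    for key, record in source.items():
        if record.last_modified > last_load:
            result[key] = record
    return result


def process_deleted_records(source: Table, destination: Table,
                            now: datetime, days: int) -> Table:
    # Only destination records changed within the look-back window are
    # checked; those missing from the source are deleted. Older records
    # are kept without being checked.
    cutoff = now - timedelta(days=days)
    return {
        key: record
        for key, record in destination.items()
        if record.last_modified < cutoff or key in source
    }
```

With a 30-day window evaluated on January 31, a destination record last changed on January 20 that no longer exists in the source is deleted, while a missing record last changed on December 1 of the previous year is outside the window and survives. This is why the window should cover the period in which deletions are expected to happen.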

Data Source Configuration

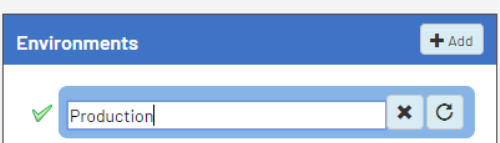

Environments and Data Sources

The description given to a Data Source when it is first created is used across all environments to identify that Data Source.

Give it a generic description the first time (e.g., ERP Data Source, Cube Data Source) and, if necessary, rename it after the first environment has been created.

The following information is needed to configure the Data Sources:

- Database server credentials: Server name, Instance, Authentication strategy.

- Main ERP database information: Database and schema name.

ERP Data Source

- In the upper-right hand corner, click the icon to access the Administration section.

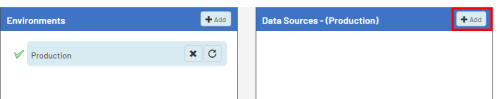

- On the left pane, select Env. & Data Sources.

- By default, there is already an environment called Production, which you can rename by double-clicking in the name box. Once changed, press the Enter key.

- In the Data Sources section, click Add to create the first Data Source.

- Complete the ERP Data Source configuration. See instructions for MS SQL Server below.

- Datasource description: Sage XRT Advanced Data Source

- Type: SQLSERVER

- Server: Database server of Sage XRT Advanced

- Database name: Name of the Sage XRT Advanced database (beware of case sensitivity)

- Database schema name: Create the following two entries by clicking the icon (replace DatabaseName with the appropriate value):

  - DatabaseName.FOLDER (replace FOLDER with the folder name)

  - DatabaseName.NEC_FOLDER (replace FOLDER with the folder name)

  Note: This second line contains the Nectari Custom Schema. You can use a different name, but we highly recommend following this naming convention:

  - Start with NEC

  - Use all capitals

  - Separate words with an underscore

  Note: The application searches for tables in the same order as the schemas are listed and stops searching as soon as a table is found in any schema. Therefore, if you have multiple tables with the same name in different schemas, make sure the schema containing the table you want to use appears first.

  Important: Choose a unique Custom Schema name for each Environment.

- Nectari schema: Enter the chosen Nectari custom schema for the current environment

- Authentication strategy: UseSpecific

- User Name: SQL user accessing the Sage XRT Advanced database (for example, sa)

- Password: The user's password

- Click on Validate then on Save to complete the configuration of the Data Source.
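The schema rules in this configuration (recommended Custom Schema naming, one unique Custom Schema per Environment, and first-match table lookup in listed schema order) can be checked with a short Python sketch. It is an illustration under assumptions, not Nectari code: the function names and the `SXA` database name are hypothetical, and allowing digits in schema names is an assumption beyond the documented convention.

```python
import re
from typing import Optional

# Hypothetical check of the recommended Custom Schema convention:
# starts with NEC, all capitals, words separated by underscores.
# Allowing digits is an assumption.
CUSTOM_SCHEMA = re.compile(r"NEC(?:_[A-Z0-9]+)*")


def follows_convention(schema: str) -> bool:
    """True if the schema name (the part after 'DatabaseName.') matches."""
    return CUSTOM_SCHEMA.fullmatch(schema) is not None


def shared_custom_schemas(schema_by_env: dict[str, str]) -> set[str]:
    """Custom schema names used by more than one environment (should be empty)."""
    seen: set[str] = set()
    shared: set[str] = set()
    for schema in schema_by_env.values():
        if schema in seen:
            shared.add(schema)
        seen.add(schema)
    return shared


def resolve_table(table: str, schemas: list[str],
                  tables_by_schema: dict[str, set[str]]) -> Optional[str]:
    """Return 'schema.table' for the first listed schema containing the table.

    Models the documented search order: the search stops at the first match,
    so a same-named table in a later schema is never used.
    """
    for schema in schemas:
        if table in tables_by_schema.get(schema, set()):
            return f"{schema}.{table}"
    return None
```

With the schemas listed as `SXA.FOLDER` then `SXA.NEC_FOLDER`, a table that exists in both resolves to `SXA.FOLDER`, which is why the schema holding the table you want must be listed first.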

Importing Templates

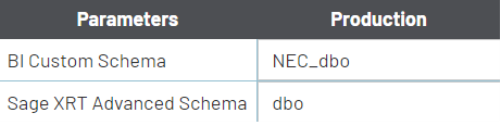

For each environment, the following previously configured information will be required:

- ERP Database Name

- Nectari Custom Schema

- ERP Schema

Download the Template file: TPL_2021R1.0.XXX_SXA.zip.

The XXX represents the build number of the template (use the highest one available).

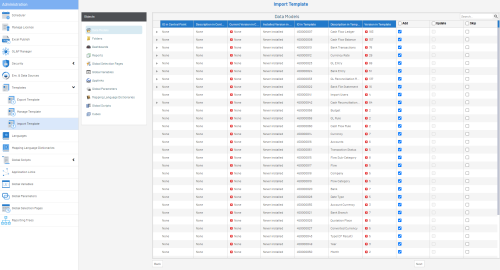

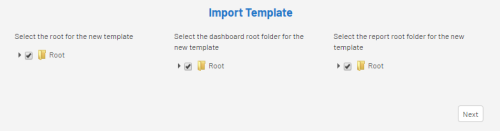
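If several template builds have been downloaded, the highest build can be picked by comparing build numbers numerically rather than alphabetically (alphabetically, build 99 would sort after build 105). The Python sketch below assumes the file names follow exactly the TPL_2021R1.0.XXX_SXA.zip shape with a purely numeric build; `latest_template` is a hypothetical helper, not part of Nectari.

```python
import re
from typing import Optional

# Assumed file-name shape, based on TPL_2021R1.0.XXX_SXA.zip where XXX
# is a numeric build number.
TEMPLATE_NAME = re.compile(r"TPL_2021R1\.0\.(\d+)_SXA\.zip")


def latest_template(file_names: list[str]) -> Optional[str]:
    """Return the template file with the highest build number, if any."""
    best: Optional[tuple[int, str]] = None
    for name in file_names:
        match = TEMPLATE_NAME.fullmatch(name)
        if match is None:
            continue  # not a template file of the expected shape
        build = int(match.group(1))  # numeric comparison: 105 > 99
        if best is None or build > best[0]:
            best = (build, name)
    return best[1] if best else None
```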

Running the Import Template

- In the upper-right hand corner, click the icon to access the Administration section.

- In the Administration section, click the Templates drop-down menu in the left pane.

- Select Import Template.

- Choose the specific location where the new templates will be installed and click on Next.

Note: Usually, the Root folder is used.

- In the Import Template window, click on Select files....

- Browse to the folder where you saved the template .zip file, select it, then click Open.

- In the Data Sources Mapping screen, associate the Data Sources (ERP) listed in the Received Data Sources Description column (those from the template) with the Data Sources you previously defined in the Central Point (listed in the Current Data Sources Description column).

- In the Received Data Sources Description column, make sure that only the checkboxes of the Data Sources you want to use from the template are ticked.

- In the Current Data Sources Description column, click Bind a Data Source to open the drop-down list of existing Data Sources, then click Next.

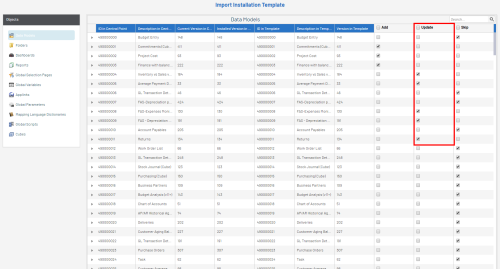

The next screen displays all of the template content alongside what the Central Point already contains.

On a first installation, everything is set to Add by default; leave these defaults as they are.

- In this case, the first four columns display None and Never Installed, the next three detail the template content, and the last three let you choose to Add, Update or Skip each item during the installation.

Note: In the case of an update, refer to Updating template for more details.

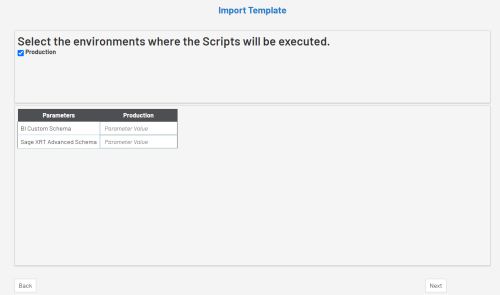

- Once this is complete, a window prompts you to enter the parameters needed to create the custom objects.

- If more than one Environment has been created, you will see one column per Environment. You can untick an Environment checkbox, in which case the Global Scripts will not run in it.

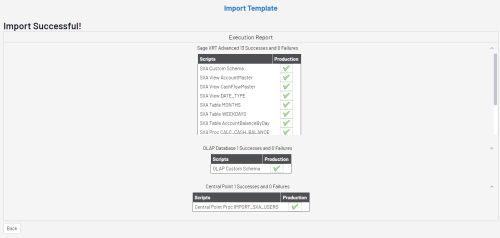

- After importing, an Execution Report is produced, as shown below.

Note: The first section is for the ERP Data Source and the one below it is for the Cube Data Source.

You can click the corresponding button to see the details of each script individually. If no failures are reported, close the window.

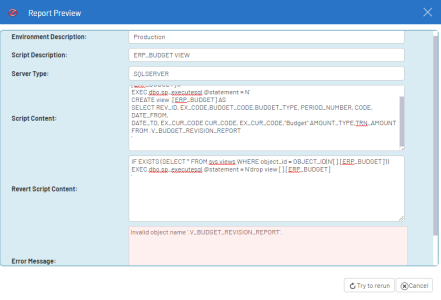

- If any of the scripts failed to run, a fail icon is displayed. Click the fail icon to open the Report Preview window, which shows the respective SQL script.

- Copy this script, debug it, and run it separately if needed. Users proficient in SQL can debug it directly in the Report Preview window and run it by clicking the Try to rerun button.

Configuring Excel Reports

The Sage XRT Advanced template includes several Excel Add-In reports, and those files must be configured to work with your data.

For more information on how to configure Excel Add-In files, please see Environment Configurations and Data Model Configurations.

Setting Up the OLAP Manager

The OLAP Manager includes a cube with a Stored Procedure that calculates the Account Balances for the current day and the previous day. This cube was created specifically so that the Scheduler can calculate Account Balances regularly, without manual intervention.

Upon each execution, the attached Stored Procedure deletes the balances of the current and previous day. It then uses the most recent balance remaining in the Custom Table as a starting point, adds any missing transactions from that day on, and calculates a new balance. Therefore, if transactions dated more than one day in the past are added to your Cash Ledger, they will likely not be taken into account until you recalculate the older balances.

To do so, re-run the calculation manually: navigate to Cash, then Cash Flow Balance, right-click the entry and select View Info Pages.
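The effect described above (late, back-dated transactions being missed until older balances are recalculated) can be illustrated with a Python sketch of the recalculation. This is a model under assumptions, not the actual Stored Procedure: balances are a hypothetical dict of date to closing balance, transactions are (date, amount) pairs, and a recalculated balance is taken to be the most recent remaining balance plus all transactions dated after it up to that day.

```python
from datetime import date, timedelta
from decimal import Decimal
from typing import Optional


def refresh_recent_balances(balances: dict[date, Decimal],
                            transactions: list[tuple[date, Decimal]],
                            today: date) -> dict[date, Decimal]:
    """Hypothetical model of the scheduled balance recalculation."""
    yesterday = today - timedelta(days=1)

    # 1. Delete the balances of the current and previous day.
    kept = {day: bal for day, bal in balances.items() if day < yesterday}

    # 2. Start from the most recent remaining balance (zero if none).
    anchor: Optional[date] = max(kept) if kept else None
    start = kept[anchor] if anchor is not None else Decimal(0)

    # 3. Rebuild yesterday's and today's balances. Only transactions dated
    #    after the anchor are added, so a transaction dated on or before the
    #    anchor that was entered late is never picked up by this run.
    for day in (yesterday, today):
        added = sum((amount for tx_day, amount in transactions
                     if (anchor is None or tx_day > anchor) and tx_day <= day),
                    Decimal(0))
        kept[day] = start + added
    return kept
```

For example, with a stored balance of 100 on March 3 and a run on March 5, transactions dated March 4 and 5 are included, but a transaction dated March 2 that was entered late is ignored. In this model it only appears once the March 3 balance itself is recalculated, which corresponds to the manual re-run described above.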

Updating template

Some considerations you must take into account before starting:

- We highly recommend making fresh backups of both the Nectari database and the Central Point before updating a template.

- Check for customizations to the Nectari Data Models and Nectari custom SQL objects delivered with the initial template version, as you might lose these customizations when updating.

- You must have a template version that matches the installed software version. If you are using Nectari 9, the template should also be version 9.

Upgrading the Nectari software updates only the software, not the template. In other words, the existing Nectari Data Models and Views are not affected.

After a software upgrade, you do not have to update the template systematically. A template update is useful if you have encountered problems with specific Nectari Data Models or Nectari custom SQL objects, since it includes fixes.

To update a template:

- After mapping the Data Sources, tick the checkboxes of the objects you want to update and click Next.

Note: By default, no checkbox in the Update column is ticked. If there is a new Data Model or View, its Add checkbox is ticked; select Skip if you want to ignore it.

Important: Ticking the Update checkbox overwrites the existing Nectari objects associated with that Data Model or connected to other objects (dependencies). Any customizations made to these objects will be lost.

- Select the environment in which the scripts will be executed and click on Next.

- Complete the parameters and click on Next.

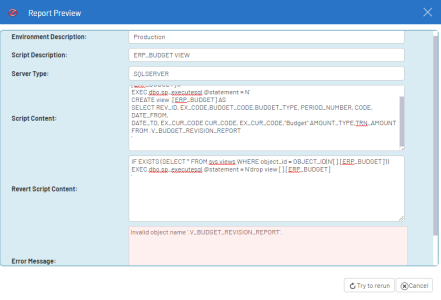

- In the Execution Report window, if any of the scripts failed to run, a fail icon is displayed. Click the fail icon to open the Report Preview window, which shows the respective SQL script.

- Copy this script, debug it, and run it separately if needed. Users proficient in SQL can debug it directly in the Report Preview window and run it by clicking the Try to rerun button.

Web browsers have updated their cookie policies, and these changes must be applied to your Web Client if you want to embed Nectari in your ERP website or use Single Sign-On (SSO). Refer to Cookie Management for more details.

Importing Users from Sage XRT Advanced

To import Users from Sage XRT Advanced, you must:

-

Run the Extraction for any previously added Users to sync the Users stored in the Nectari Database.

-

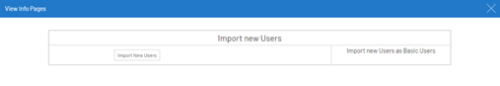

From the Nectari user interface, expand Import Users, then right-click on Import Users and select View Info Page (shown below).

-

Run the Info Page to import all Users from Sage XRT Advanced.